The Quest for Perfect Body Measurements

Let me take you on a journey through our fascinating (and sometimes frustrating!) exploration of body measurement technology. Trust me, what seemed like a straightforward problem turned into quite the adventure!

Our First Try: LiDAR Scanning (Spoiler: It Wasn't Perfect)

We started with what seemed like the obvious choice - LiDAR scanning. Here's what we did:

- Grabbed a body scan using LiDAR (cool, right?)

- Fed it through our fancy Python API

- Compared it with our reference model
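To make that pipeline a bit more concrete, here is a minimal sketch of the "measure and compare" step. It is not our actual API: the function names, the y-up axis convention, the metre units, and the 1 cm slice tolerance are all assumptions for illustration. The idea is to cut a thin horizontal slice out of the scanned point cloud, wrap a convex hull around it (roughly what a tape measure does), and compare the perimeter against a reference value.

```python
import math

def slice_points(points, height, tolerance=0.01):
    """Keep the (x, z) of points whose y (assumed: height in metres) is near `height`."""
    return [(x, z) for x, y, z in points if abs(y - height) <= tolerance]

def convex_hull(pts):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(pts))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def girth(points, height, tolerance=0.01):
    """Perimeter of the convex hull of one horizontal slice (a tape-measure-style girth)."""
    hull = convex_hull(slice_points(points, height, tolerance))
    return sum(math.dist(hull[i], hull[(i + 1) % len(hull)]) for i in range(len(hull)))

def relative_error(measured, reference):
    """How far off the scan is from the reference, as a fraction of the reference."""
    return abs(measured - reference) / reference

# Synthetic stand-in for a scan: a ring of radius 0.15 m at a height of 1.2 m.
ring = [(0.15 * math.cos(math.radians(d)), 1.2, 0.15 * math.sin(math.radians(d)))
        for d in range(360)]
print(relative_error(girth(ring, 1.2), 2 * math.pi * 0.15))
```

On a clean synthetic ring the error is tiny. Real scans are noisy and full of holes, and that is exactly where this kind of naive slicing starts to drift.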

The Plot Twist

Here's where things got interesting - while the 3D models looked amazing (seriously, they were beautiful), the actual measurements were... well, let's just say they weren't winning any accuracy contests. 🎯

Plan B: Computer Vision Magic

After our LiDAR adventure, we thought, "Hey, what about computer vision?"

What We Nailed

- Built an awesome model that could spot torsos and breasts (AI for the win!)

- Got it running smoothly in our app

What's Still on Our Plate

- Training our depth prediction model (it's like teaching a computer to see in 3D!)

- Hunting for more training data (turns out, you need A LOT)

- Fine-tuning everything to get those perfect measurements

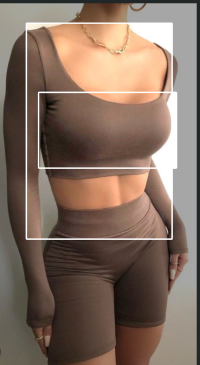

Sample Scan

Manual Point Measurement with ARKit

This approach involves manual point-to-point measurement using ARKit technology. The process requires:

- Manual point placement on the body

- Continuous point tracking during rotation

- Assistant support for measurement

Limitations

The primary challenge is that points remain static in space rather than tracking body movement, leading to inaccurate measurements. Additionally, this method requires a second person for operation.
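A toy calculation shows why static points hurt. This is only an illustrative sketch in Python, not ARKit code: the landmark coordinates and the 3 cm sway are made-up numbers, and `measure_with_static_anchors` is a hypothetical helper. The first point stays pinned where it was placed in world space, the person shifts slightly, and the second point lands on the moved body.

```python
import math

def translate(point, offset):
    """Shift a 3D point by an offset vector."""
    return tuple(c + d for c, d in zip(point, offset))

def measure_with_static_anchors(first, second, sway):
    """Distance between a world-pinned first point and a second point placed after the body moved.

    `first` and `second` are the true landmark positions at the moment the first
    point is placed; `sway` is how far the body moved before the second was placed.
    """
    anchor_a = first                    # stays fixed in space, does not follow the body
    anchor_b = translate(second, sway)  # placed on the body at its new position
    return math.dist(anchor_a, anchor_b)

# Hypothetical landmarks 40 cm apart (metres).
left = (-0.20, 1.30, 0.0)
right = (0.20, 1.30, 0.0)

true_width = math.dist(left, right)
measured = measure_with_static_anchors(left, right, sway=(0.03, 0.0, 0.0))
print(f"true: {true_width:.2f} m, measured: {measured:.2f} m")
```

In this setup the sway goes straight into the result as error, because nothing ties the first point back to the body once it has moved.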

Getting Artsy with Photogrammetry

Next up on our tech exploration journey: tweaking Apple's Object Capture tech. (Yes, the same tech that lets you scan your coffee mug in 3D! 📱)

The Setup (Sounds Simple, Right?)

- Phone needs to be upright (like you're taking a selfie)

- You need to see both the floor and the person (no floating bodies, please!)

- We added voice guidance (like your personal photography coach)

- The app takes burst photos while you do a little spin 🔄

Our Shopping List

Pre-scan Checks

- Making sure the lighting is just right (no vampire photos!)

- Checking if we can see the floor (it's more important than you'd think)
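For a rough idea of what a lighting check can look like, here is a small sketch in Python. It is an assumption-heavy illustration rather than what ships in the app: the `low` and `high` thresholds are hypothetical and would need tuning on real frames, and the floor check is not covered here. It averages the Rec. 709 luma over a frame's pixels and rejects frames that are too dark or too blown out.

```python
def mean_luma(pixels):
    """Mean Rec. 709 luma (0..255) over an iterable of (r, g, b) pixels in 0..255."""
    total = 0.0
    count = 0
    for r, g, b in pixels:
        total += 0.2126 * r + 0.7152 * g + 0.0722 * b
        count += 1
    if count == 0:
        raise ValueError("frame has no pixels")
    return total / count

def lighting_ok(pixels, low=60.0, high=200.0):
    """True when the frame is neither too dark nor overexposed (thresholds are guesses)."""
    return low <= mean_luma(pixels) <= high

# Three fake single-color frames: too dark, fine, too bright.
for name, color in [("dim", (10, 10, 10)), ("ok", (128, 128, 128)), ("bright", (250, 250, 250))]:
    frame = [color] * 100
    print(name, lighting_ok(frame))
```

A mean over the whole frame is the simplest possible version. It can miss a well-lit room with the person standing in shadow, so checking only the region around the person would be a natural next step.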

Voice Guidance System

- Like having a friendly robot assistant

- Tells you exactly when and where to move

The Behind-the-Scenes Magic

- Turning those photos into measurements

- Getting expert eyes on the results (because computers need fact-checking too)

Tech Nerdy Bits

- More photos = longer processing time

- But also: more photos = better accuracy

Check Out These Cool Scans!

Here's what happened when we scanned a simple object - pretty neat, but still not perfect

And here's a human scan - definitely requires a lot of photos and still not perfect

The Plot Twist: Enter 3DLOOK

After months of experimenting (and quite a few coffee-fueled debugging sessions), we had our "aha" moment. Sometimes the best solution isn't building everything from scratch - it's knowing when to leverage existing expertise.

That's when we discovered 3DLOOK. Think of it as standing on the shoulders of giants instead of trying to build our own giant from scratch. This decision meant:

- Getting accurate measurements right out of the box

- Saving our dev team from more caffeine-induced coding marathons

- Delivering a reliable solution to our users (because that's what really matters!)

- Having time to focus on making other parts of our app awesome

Wrapping Up

After all that experimenting, we ended up going with 3DLOOK. Honestly, could we have built everything from scratch? Maybe! But this way was so much easier and got us where we needed to be way faster.

Plus, I got to play around with some really cool tech along the way - LiDAR, ARKit, photogrammetry - you name it! Definitely adding those to my toolbelt for future projects. 🛠️